Modified memory architecture: the instruction and data scratchpad are controlled by the compiler based on the allocations generated by the memory allocation algorithm

_____________________________________________________________________________________

Memory architecture describes the methods used to implement electronic

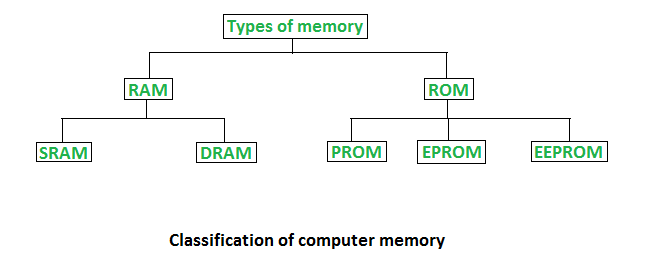

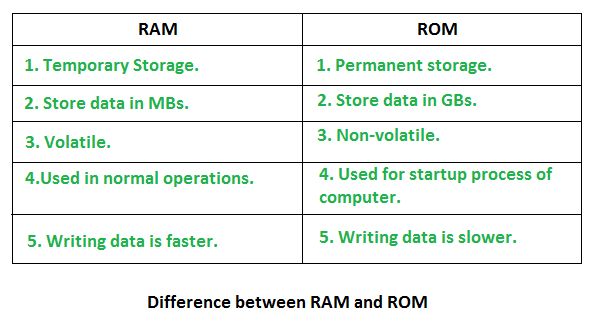

Memory is an essential element of a computing system: without it, a computer cannot perform even simple tasks. Computer memory is of two basic types: primary memory (RAM and ROM) and secondary memory (hard drive, CD, etc.). Random Access Memory (RAM) is volatile primary memory, and Read-Only Memory (ROM) is non-volatile primary memory.

Classification of Computer Memory

Random Access Memory (RAM) –

- It is also called read-write memory or the main memory or the primary memory.

- The programs and data that the CPU requires during the execution of a program are stored in this memory.

- It is a volatile memory as the data is lost when the power is turned off.

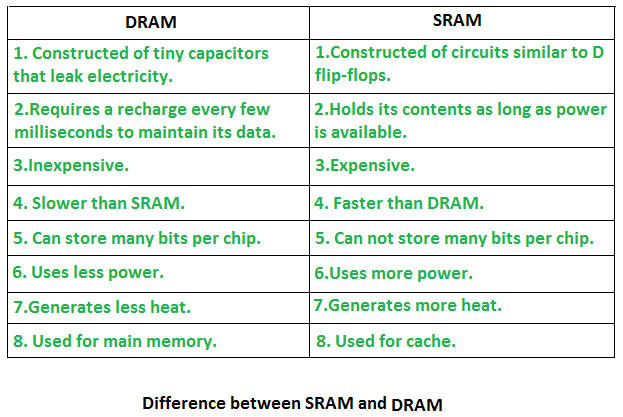

- RAM is further classified into two types: SRAM (Static Random Access Memory) and DRAM (Dynamic Random Access Memory).

Difference between SRAM and DRAM

Read-Only Memory (ROM)

- Stores crucial information essential to operate the system, like the program essential to boot the computer.

- It is not volatile.

- Always retains its data.

- Used in embedded systems or where the programming needs no change.

- Used in calculators and peripheral devices.

- ROM is further classified into four types: MROM, PROM, EPROM, and EEPROM.

Types of Read-Only Memory (ROM)

- PROM (Programmable read-only memory) – It can be programmed by the user. Once programmed, the data and instructions in it cannot be changed.

- EPROM (Erasable Programmable read-only memory) – It can be reprogrammed. To erase data from it, expose it to ultraviolet light. To reprogram it, erase all the previous data.

- EEPROM (Electrically erasable programmable read-only memory) – The data can be erased by applying an electric field, with no need for ultraviolet light. Selected portions of the chip can be erased without erasing the whole chip.

- MROM (Mask ROM) – Mask ROM is a kind of read-only memory whose contents are fixed by the mask used at the time of production. As with other types of ROM, the user generally cannot change the data stored in it; where a change is possible at all, the process is difficult or slow.

Difference between RAM and ROM

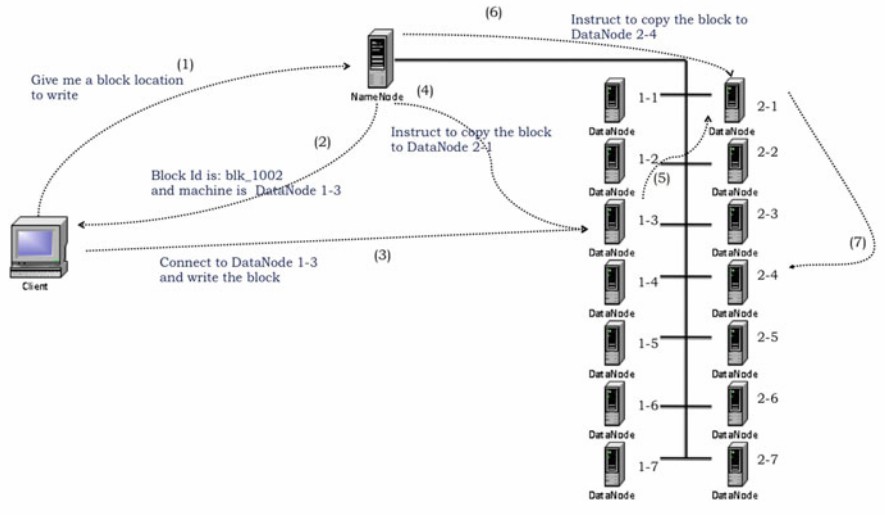

An application adds data to HDFS by creating a new file and writing the data to it. After the file is closed, the bytes written cannot be altered or removed except that new data can be added to the file by reopening the file for append. HDFS implements a single-writer, multiple-reader model.

The HDFS client that opens a file for writing is granted a lease for the file; no other client can write to the file. The writing client periodically renews the lease by sending a heartbeat to the NameNode. When the file is closed, the lease is revoked. The lease duration is bound by a soft limit and a hard limit. Until the soft limit expires, the writer is certain of exclusive access to the file. If the soft limit expires and the client fails to close the file or renew the lease, another client can preempt the lease. If the hard limit (one hour) expires and the client has still failed to renew the lease, HDFS assumes that the client has quit, automatically closes the file on behalf of the writer, and recovers the lease. The writer’s lease does not prevent other clients from reading the file; a file may have many concurrent readers.
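The lease rules above can be sketched as a small state model. This is a toy illustration, not HDFS code: the class and function names are hypothetical, the soft-limit value is an assumption (only the one-hour hard limit is stated above), and lease recovery after the hard limit is reduced to a simple takeover.

```python
from dataclasses import dataclass

SOFT_LIMIT_S = 60      # assumed value, for illustration only
HARD_LIMIT_S = 3600    # one hour, as stated in the text


@dataclass
class Lease:
    holder: str
    last_renewed: float  # time of the last heartbeat, in seconds

    def renew(self, now: float) -> None:
        self.last_renewed = now

    def state(self, now: float) -> str:
        age = now - self.last_renewed
        if age < SOFT_LIMIT_S:
            return "exclusive"    # writer is certain of exclusive access
        if age < HARD_LIMIT_S:
            return "preemptible"  # another client may take the lease
        return "expired"          # the file system would close the file itself


def try_acquire(lease: Lease, client: str, now: float) -> bool:
    """Return True if `client` holds the lease after this call."""
    if lease.holder == client:
        lease.renew(now)
        return True
    if lease.state(now) == "exclusive":
        return False
    # Simplification: preemption and post-expiry recovery are both
    # modelled as handing the lease to the requesting client.
    lease.holder = client
    lease.last_renewed = now
    return True
```

A writer that keeps calling `renew` stays in the `exclusive` state; once it stops, a second client's `try_acquire` begins to succeed after the soft limit has passed.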

An HDFS file consists of blocks. When there is a need for a new block, the NameNode allocates a block with a unique block ID and determines a list of DataNodes to host replicas of the block. The DataNodes form a pipeline, the order of which minimizes the total network distance from the client to the last DataNode. Bytes are pushed to the pipeline as a sequence of packets. The bytes that an application writes first buffer at the client side. After a packet buffer is filled (typically 64 KB), the data are pushed to the pipeline. The next packet can be pushed to the pipeline before receiving the acknowledgment for the previous packets. The number of outstanding packets is limited by the outstanding packets window size of the client.
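To make the packet window concrete, here is a minimal simulation of splitting buffered bytes into packets and sending them with a bounded number of unacknowledged packets. The function names and the window size are hypothetical, and acknowledgments are assumed to arrive in order.

```python
from collections import deque

PACKET_SIZE = 64 * 1024  # typical packet buffer size mentioned in the text
WINDOW = 3               # hypothetical outstanding-packets window size


def split_into_packets(data: bytes, packet_size: int = PACKET_SIZE) -> list:
    """Cut the buffered bytes into fixed-size packets (the last may be short)."""
    return [data[i:i + packet_size] for i in range(0, len(data), packet_size)]


def stream(packets, window: int = WINDOW) -> list:
    """Return the ordered log of ("send", seq) and ("ack", seq) events."""
    log = []
    in_flight = deque()
    for seq, _packet in enumerate(packets):
        if len(in_flight) == window:
            # Window is full: wait for the oldest acknowledgment first.
            log.append(("ack", in_flight.popleft()))
        in_flight.append(seq)
        log.append(("send", seq))
    while in_flight:
        log.append(("ack", in_flight.popleft()))
    return log
```

With a window of 2, packets 0 and 1 are sent back to back, and packet 2 is sent only after packet 0 has been acknowledged.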

After data are written to an HDFS file, HDFS does not provide any guarantee that data are visible to a new reader until the file is closed. If a user application needs the visibility guarantee, it can explicitly call the hflush operation. Then the current packet is immediately pushed to the pipeline, and the hflush operation will wait until all DataNodes in the pipeline acknowledge the successful transmission of the packet. All data written before the hflush operation are then certain to be visible to readers.
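The visibility rule can be illustrated with a toy model. It assumes the worst case allowed by the paragraph above, namely that nothing written since the last hflush is visible; real readers may see more. `SketchFile` is a hypothetical class, not part of any HDFS client library.

```python
class SketchFile:
    """Toy file: bytes are guaranteed visible only after hflush() or close()."""

    def __init__(self) -> None:
        self._written = bytearray()
        self._visible_len = 0
        self.closed = False

    def write(self, data: bytes) -> None:
        if self.closed:
            raise ValueError("file is closed")
        self._written += data

    def hflush(self) -> None:
        # Everything written so far becomes visible to new readers.
        self._visible_len = len(self._written)

    def close(self) -> None:
        self.hflush()
        self.closed = True

    def read_visible(self) -> bytes:
        """What a new reader is guaranteed to see in this model."""
        return bytes(self._written[:self._visible_len])
```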

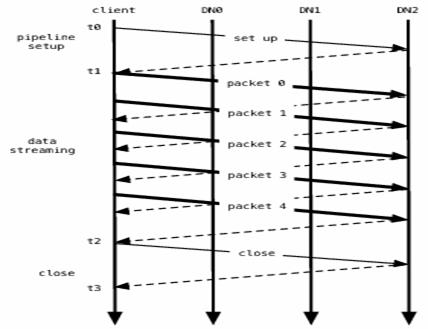

If no error occurs, block construction goes through three stages, as shown in the figure above, which illustrates a pipeline of three DataNodes (DN) and a block of five packets. In the picture, bold lines represent data packets, dashed lines represent acknowledgment messages, and thin lines represent control messages to set up and close the pipeline. Vertical lines represent activity at the client and the three DataNodes, where time proceeds from top to bottom. From t0 to t1 is the pipeline setup stage. The interval t1 to t2 is the data streaming stage, where t1 is the time when the first data packet is sent and t2 is the time when the acknowledgment for the last packet is received. Here an hflush operation transmits packet 2. The hflush indication travels with the packet data and is not a separate operation. The final interval t2 to t3 is the pipeline close stage for this block.

In a cluster of thousands of nodes, node failures (most commonly storage faults) are daily occurrences. A replica stored on a DataNode may become corrupted because of faults in memory, disk, or network. HDFS generates and stores checksums for each data block of an HDFS file. Checksums are verified by the HDFS client while reading to help detect any corruption caused by the client, the DataNodes, or the network. When a client creates an HDFS file, it computes the checksum sequence for each block and sends it to a DataNode along with the data. A DataNode stores checksums in a metadata file separate from the block’s data file. When HDFS reads a file, each block’s data and checksums are shipped to the client. The client computes the checksums for the received data and verifies that the newly computed checksums match the checksums it received. If not, the client notifies the NameNode of the corrupt replica and then fetches a different replica of the block from another DataNode.
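The verify-then-fall-back behaviour described above can be sketched as follows. The sketch uses CRC32 over fixed-size chunks via Python's `zlib.crc32`; the chunk size and all function names are assumptions made for illustration, and the list of corrupt nodes stands in for the notification sent to the NameNode.

```python
import zlib

BYTES_PER_CHECKSUM = 512  # assumed chunk size, for illustration


def checksums(data: bytes, chunk: int = BYTES_PER_CHECKSUM) -> list:
    """Checksum sequence for a block: one CRC32 per chunk."""
    return [zlib.crc32(data[i:i + chunk]) for i in range(0, len(data), chunk)]


def read_block(replicas):
    """Try replicas in order until one passes verification.

    `replicas` is a list of (datanode, data, stored_checksums) tuples.
    Returns (data, corrupt_nodes); corrupt_nodes would be reported
    to the NameNode in a real system.
    """
    corrupt = []
    for node, data, stored in replicas:
        if checksums(data) == stored:
            return data, corrupt
        corrupt.append(node)
    raise IOError("every replica failed checksum verification")
```

For example, if the first replica has a single flipped byte, `read_block` records that DataNode as corrupt and returns the data from the next replica.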

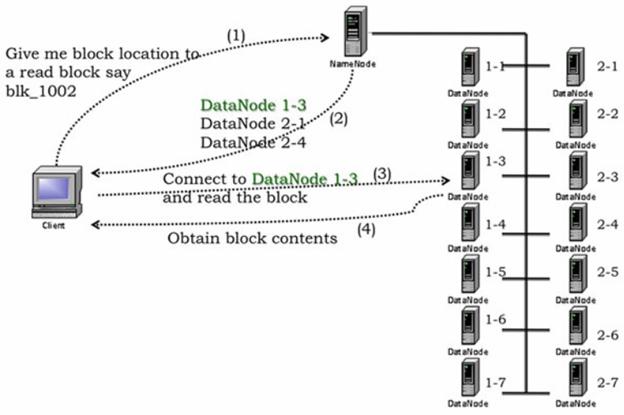

When a client opens a file to read, it fetches the list of blocks and the locations of each block replica from the NameNode. The locations of each block are ordered by their distance from the reader. When reading the content of a block, the client tries the closest replica first. If the read attempt fails, the client tries the next replica in sequence. A read may fail if the target DataNode is unavailable, the node no longer hosts a replica of the block, or the replica is found to be corrupt when checksums are tested.
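A minimal sketch of ordering replicas by distance and falling back on failure is shown below. It assumes nodes are identified by topology paths such as `/d1/r1/n1` (data center, rack, node) and that distance is the number of hops to the closest common ancestor; both the path format and the helper names are illustrative assumptions.

```python
def distance(a: str, b: str) -> int:
    """Hops from a and b up to their closest common ancestor in the tree."""
    pa, pb = a.strip("/").split("/"), b.strip("/").split("/")
    common = 0
    for x, y in zip(pa, pb):
        if x != y:
            break
        common += 1
    return (len(pa) - common) + (len(pb) - common)


def order_replicas(reader: str, replicas: list) -> list:
    """Sort replica locations nearest-first relative to the reader."""
    return sorted(replicas, key=lambda node: distance(reader, node))


def read_with_failover(reader: str, replicas: list, fetch):
    """Try the closest replica first; move to the next one on failure.

    `fetch(node)` is a caller-supplied function that returns the block
    bytes or raises IOError (node down, replica missing, or corrupt).
    """
    for node in order_replicas(reader, replicas):
        try:
            return node, fetch(node)
        except IOError:
            continue
    raise IOError("no replica could be read")
```

Under this distance measure a replica on the same rack (distance 2) is tried before one on another rack (distance 4) or in another data center (distance 6).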

HDFS permits a client to read a file that is open for writing. When reading a file open for writing, the length of the last block still being written is unknown to the NameNode. In this case, the client asks one of the replicas for the latest length before starting to read its content.

The design of HDFS I/O is particularly optimized for batch processing systems, like MapReduce, which require high throughput for sequential reads and writes. Ongoing efforts will improve read/write response time for applications that require real-time data streaming or random access.

Staging

A client request to create a file does not reach the NameNode immediately. In fact, initially the HDFS client caches the file data into a temporary local file. Application writes are transparently redirected to this temporary local file. When the local file accumulates data worth over one HDFS block size, the client contacts the NameNode. The NameNode inserts the file name into the file system hierarchy and allocates a data block for it. The NameNode responds to the client request with the identity of the DataNode and the destination data block. Then the client flushes the block of data from the local temporary file to the specified DataNode. When a file is closed, the remaining un-flushed data in the temporary local file is transferred to the DataNode. The client then tells the NameNode that the file is closed. At this point, the NameNode commits the file creation operation into a persistent store. If the NameNode dies before the file is closed, the file is lost.
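The staging behaviour can be sketched as a writer that buffers locally and ships data one block at a time. `StagingWriter` and `send_block` are hypothetical names; an in-memory buffer stands in for the temporary local file, and `send_block` stands in for the NameNode allocation plus the transfer to the DataNode.

```python
class StagingWriter:
    """Buffer application writes and flush them one full block at a time."""

    def __init__(self, block_size: int, send_block) -> None:
        self.block_size = block_size
        self.send_block = send_block  # called with the bytes of each block
        self.buf = bytearray()        # stands in for the temporary local file

    def write(self, data: bytes) -> None:
        self.buf += data
        # Flush whenever at least one full block has accumulated.
        while len(self.buf) >= self.block_size:
            self.send_block(bytes(self.buf[:self.block_size]))
            del self.buf[:self.block_size]

    def close(self) -> None:
        # Transfer the remaining un-flushed data, if any.
        if self.buf:
            self.send_block(bytes(self.buf))
            self.buf.clear()
```

With a block size of 4 bytes, writing `b"abcdef"`, `b"gh"`, and `b"i"` and then closing sends three blocks: `b"abcd"`, `b"efgh"`, and `b"i"`.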

The above approach was adopted after careful consideration of the target applications that run on HDFS. These applications need streaming writes to files. If a client writes to a remote file directly without any client-side buffering, the network speed and the congestion in the network impact throughput considerably. This approach is not without precedent. Earlier distributed file systems, e.g. AFS, have used client-side caching to improve performance. A POSIX requirement has been relaxed to achieve higher performance of data uploads.

HDFS Read workflow

- To start a file read, the client opens the required file by calling open() on the FileSystem object, which is an instance of DistributedFileSystem. The open() method initiates the HDFS client for the read request.

- DistributedFileSystem contacts the Namenode to get the block locations of the file to be read. Block locations are held in the Namenode's metadata. For each block, the Namenode returns the addresses of the Datanodes that hold a copy of that block, sorted by their proximity to the client, nearest Datanode first.

- DistributedFileSystem returns to the client an FSDataInputStream, an input stream that supports file seeks. FSDataInputStream wraps a DFSInputStream, which manages the I/O with the Namenode and Datanodes. The following steps are performed in the read operation.

- The client calls read() on the DFSInputStream. DFSInputStream holds the Datanode addresses of the block locations for the first few blocks of the file. It locates the first block on the closest Datanode and connects to it.

- A block reader is initialized for the target block and Datanode with the block ID, the start offset to read from, the length of data to read, and the client name.

- Data is streamed from the Datanode back to the client in the form of packets and copied directly into the input buffer provided by the client. The DFS client reads the data, verifies the checksums, and updates the client buffer.

- read() is called repeatedly on the stream until the end of the block is reached. DFSInputStream then closes the connection to that Datanode and finds the closest Datanode for the next block.

- Blocks are read in order. Once DFSInputStream has finished reading the first few blocks, it calls the Namenode to retrieve the Datanode locations for the next batch of blocks.

- When the client has finished reading, it calls close() on the FSDataInputStream to close the connection.

- If a Datanode is down during reading, or DFSInputStream encounters an error while communicating with it, DFSInputStream switches to the next available Datanode that holds a replica. DFSInputStream remembers the Datanodes that encountered errors so that it does not retry them for later blocks.

With the help of the Namenode, the client gets the list of the best Datanodes for each block and communicates directly with the Datanodes to retrieve the data. The Namenode serves only the addresses of the block locations rather than the data itself, which could otherwise become a bottleneck as the number of clients grows. This design allows HDFS to scale to a large number of clients, since the data traffic is spread across all the Datanodes of the cluster.
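The read workflow above, in particular the batched location lookups and the memory of failed Datanodes, can be sketched as a plain loop. This is a simulation under stated assumptions rather than the DFSInputStream implementation: `get_locations`, `fetch`, and the `batch` parameter are hypothetical stand-ins for the Namenode call, the Datanode read, and the "first few blocks" prefetch.

```python
def read_file(num_blocks: int, get_locations, fetch, batch: int = 2):
    """Read blocks in order and return (file_bytes, failed_datanodes).

    get_locations(start, count) returns, for each of `count` blocks
    beginning at `start`, a list of Datanodes sorted nearest-first.
    fetch(node, block_index) returns the block bytes or raises IOError.
    """
    dead = set()    # Datanodes that failed; never retried for later blocks
    locations = {}  # block index -> Datanode list fetched so far
    out = []
    for b in range(num_blocks):
        if b not in locations:
            # Ask the Namenode for the next batch of block locations.
            count = min(batch, num_blocks - b)
            for i, nodes in enumerate(get_locations(b, count)):
                locations[b + i] = nodes
        for node in locations[b]:
            if node in dead:
                continue
            try:
                out.append(fetch(node, b))
                break
            except IOError:
                dead.add(node)
        else:
            raise IOError(f"block {b}: no live replica")
    return b"".join(out), dead
```

Because the Namenode is contacted only once per batch and all block data flows from the Datanodes, the Namenode's load in this model grows with the number of location lookups, not with the number of bytes read.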

HDFS Write workflow

- The client creates the file by calling the create() method on DistributedFileSystem.

- DistributedFileSystem makes an RPC call to the namenode to create a new file in the filesystem’s namespace, with no blocks associated with it.

The namenode performs various checks to make sure the file doesn’t already exist and that the client has the right permissions to create the file. If these checks pass, the namenode makes a record of the new file; otherwise, file creation fails and the client is thrown an IOException. The DistributedFileSystem returns an FSDataOutputStream for the client to start writing data to.

- As the client writes data, DFSOutputStream splits it into packets, which it writes to an internal queue, called the data queue. The data queue is consumed by the DataStreamer, which is responsible for asking the namenode to allocate new blocks by picking a list of suitable datanodes to store the replicas. The list of datanodes forms a pipeline, and here we’ll assume the replication level is three, so there are three nodes in the pipeline. The DataStreamer streams the packets to the first datanode in the pipeline, which stores the packet and forwards it to the second datanode in the pipeline.

- Similarly, the second datanode stores the packet and forwards it to the third (and last) datanode in the pipeline.

- DFSOutputStream also maintains an internal queue of packets that are waiting to be acknowledged by datanodes, called the ack queue. A packet is removed from the ack queue only when it has been acknowledged by all the datanodes in the pipeline.

- When the client has finished writing data, it calls close() on the stream.

- This action flushes all the remaining packets to the datanode pipeline and waits for acknowledgments before contacting the namenode to signal that the file is complete. The namenode already knows which blocks the file is made up of, so it only has to wait for blocks to be minimally replicated before returning successfully.
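The interplay of the data queue and the ack queue in the write workflow above can be sketched with two deques. `OutputStreamSketch` is a hypothetical class for illustration; the pipeline forwarding is collapsed into a loop, and acknowledgments are assumed to arrive in order from all datanodes at once.

```python
from collections import deque


class OutputStreamSketch:
    """Toy model of the data queue, ack queue, and datanode pipeline."""

    def __init__(self, pipeline) -> None:
        self.pipeline = list(pipeline)  # datanode names, in pipeline order
        self.data_queue = deque()       # packets waiting to be sent
        self.ack_queue = deque()        # packets sent but not yet acknowledged
        self.stored = {dn: [] for dn in self.pipeline}

    def write(self, packet: bytes) -> None:
        self.data_queue.append(packet)

    def stream_one(self) -> None:
        """Send the next packet: it moves to the ack queue and each
        datanode stores it before forwarding it to the next one."""
        packet = self.data_queue.popleft()
        self.ack_queue.append(packet)
        for dn in self.pipeline:
            self.stored[dn].append(packet)

    def ack_one(self) -> bytes:
        """All datanodes acknowledged the oldest outstanding packet."""
        return self.ack_queue.popleft()

    def close(self) -> None:
        # Flush remaining packets, then wait for every acknowledgment.
        while self.data_queue:
            self.stream_one()
        while self.ack_queue:
            self.ack_one()
```

After `close()` returns, both queues are empty and every datanode in the pipeline holds every packet, which corresponds to the point at which the client would signal the namenode that the file is complete.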